IMPLEMENTING NEWSLETTER SUBSCRIPTION FOR YOUR GATSBY WEBSITE

TL;DR: You can implement your own email subscription service for (almost) free. In this post, I’m going to implement one and tell you more about AWS (Amazon Web Services). For more details, keep reading.

Welcome to this post. Do you want your own newsletter subscription for your page? You are in the right place. Not only does this post give you a tutorial for implementing a subscription, it also gives you a short introduction to a few AWS services: API Gateway, Lambda and DynamoDB.

WHY DO YOU NEED TO GATHER EMAILS FROM YOUR CUSTOMERS?

According to an Emarsys study, businesses with under $100M in revenue name email marketing as their top method for customer retention and acquisition, with 80% of them relying on it. The other top methods are organic search, paid search and social media.

Whether you run a business or not, email marketing seems to take a huge piece of the pie. Why would you skip something that has the greatest potential to bring people to your site? After all, people who are interested in your content most likely don’t bookmark your site, and even if they do, a bookmark doesn’t notify them about the juicy new content you produce. You want your audience to know when new content comes out.

Email marketing works so well because people who subscribed like your content and want to see more of it. The chance that they will come back to your site through an email is high: they liked your content in the past, so they will probably like it in the future. That is what they hoped for when they typed their email into the subscribe form. So don’t mess it up.

EXISTING SOLUTIONS

Just google it. You will find many platforms providing services such as email lists, email design, analytics, A/B testing and a lot more. However, one downside is the price you pay for the service. I was shocked by how much money some providers ask for it. In most cases, you pay more the more subscribed emails you have. Others, as far as I saw, limit you by the number of emails sent, which is not a satisfying option either.

Looking at the numbers, I really didn’t understand how they can charge so much for so few subscribers. The most popular one, MailChimp (the one with the monkey logo), offers a free plan for up to 2,000 subscribers. The next plan allows up to 50,000 subscribed emails and costs $10 a month.

In fact, MailChimp has the most reasonable and, for me, the cheapest plans of all the services I looked at.

WHEN YOU DON’T WANT TO PAY THE PRICE

For a lot of people, this is a viable option. If you don’t know how to code and all you want is to store emails and get work done with them, that is absolutely fine, and $10 a month, for example, is a very reasonable price to pay.

But… I’m a coder and I don’t like third-party solutions. Why? Because the service limits me by the number of subscriptions. What if I just want a simple list of emails, without extra services like A/B testing and analytics? I understand that those services are valuable and can be useful, but not for everyone. The most valuable thing for a website owner is acquiring a visitor’s email.

In this example, I will show you how I can store up to 100k emails and pay exactly zero dollars.

INTRODUCTION TO SERVERLESS (AWS Lambda and DynamoDB)

To implement our solution, we need a provider that runs our code somewhere. As the heading suggests, I’m going to pick AWS (Amazon Web Services) and show you how to do it.

We could run an EC2 virtual machine on Amazon with everything needed to execute our code. This is often not a good option, because you pay for the whole time the instance is running: if it runs day and night, you pay for all of that time, even when nobody uses it.

What is serverless? Serverless is kind of the opposite of EC2: when you don’t need it, it doesn’t run, and you pay only for the number of executions and how long they take. Plus, you don’t need to bother with machines, servers or crashes of your virtual machine. The big advantage is that you can focus on code and business logic.

One of AWS’s serverless services is Lambda. A Lambda is a function that is executed when a trigger occurs. Triggers include, for example, a request from API Gateway (a service that exposes HTTP endpoints), messages in a queue, a schedule (triggered periodically), changed data in a database (such as DynamoDB), changed files in S3 (object storage) and others. When invoked, the function does its job, returns some data or performs some other action, and then its life ends.

So if the function is invoked zero times, you pay nothing. You might expect to pay something for a few executions, but you still pay nothing for a few thousand. AWS also offers a so-called Free Tier with free usage limits; for Lambda, it includes 1M requests per month. Beyond that, the price depends on the number of requests and on the execution time and memory your function needs. As a rule of thumb, if you have fewer than one million requests a month, you’re not going to pay much.
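To turn the rule of thumb into numbers, here is a rough cost estimator in JavaScript, the language used for the function later in this post. Lambda bills per request plus compute time measured in GB-seconds (memory in GB multiplied by run time in seconds). The default prices and free allowances below (about $0.20 per million requests, about $0.0000166667 per GB-second, 1M requests and 400,000 GB-seconds free per month) are assumptions based on Lambda’s published pricing at one point in time; they differ by region and architecture and change over time, so check the current pricing page before relying on them.

```js
// Rough monthly cost estimate for AWS Lambda (sketch).
// ASSUMPTION: the default prices and free allowances are illustrative values;
// check the current AWS Lambda pricing page for your region and architecture.
function estimateLambdaMonthlyCost({
  requests,      // invocations per month
  avgDurationMs, // average billed duration per invocation
  memoryMb,      // memory configured for the function
  pricePerMillionRequests = 0.2,
  pricePerGbSecond = 0.0000166667,
  freeRequests = 1000000,
  freeGbSeconds = 400000
}) {
  // Compute is billed in GB-seconds: memory (GB) x duration (s), summed over invocations.
  const gbSeconds = requests * (memoryMb / 1024) * (avgDurationMs / 1000);

  // Only usage above the free allowance is billed.
  const billableRequests = Math.max(0, requests - freeRequests);
  const billableGbSeconds = Math.max(0, gbSeconds - freeGbSeconds);

  return (billableRequests / 1000000) * pricePerMillionRequests +
    billableGbSeconds * pricePerGbSecond;
}

// Example: 10,000 sign-ups a month, 100 ms each, 128 MB of memory.
console.log(estimateLambdaMonthlyCost({ requests: 10000, avgDurationMs: 100, memoryMb: 128 })); // prints 0
```

A newsletter sign-up function is short and light, so even a few million requests a month mostly stays within the compute allowance and only the request count starts to cost anything.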

DynamoDB - a serverless NoSQL key-value database that lets you store a virtually unlimited amount of data. It is scalable and fast. Like Lambda, you pay only for what you use: here, for the requests made to DynamoDB and for the amount of stored data. I’m not going to write about other factors, like data transfer in and out (between zones), because they are not relevant to us for now.

Let me explain what the keywords above actually mean.

NoSQL - a modern umbrella term for database systems that are typically horizontally scalable and do not primarily use SQL as their query language. NoSQL and SQL are like yin and yang: they pretty much complement each other. SQL databases are generally harder to scale horizontally than NoSQL ones, and less flexible. On the other hand, flexibility is not always desired (for example, in banking). SQL lets you query almost anything; it is probably the most powerful query language there is. You can aggregate, join, count, compute advanced statistics and so on. NoSQL databases are more limited at querying, but, as I mentioned, they are good at other things.

Key-value - just one part of the NoSQL world (there are many other kinds of database systems: document stores, column-oriented databases, graph databases and so on). Key-value means that you access your data by its key: key -> value. You can’t query the data itself directly, as you would in SQL.

Example: get the user with id 1234: GET(1234) => { User: … }. But you can’t simply query all users (and even if you could, it would be very inefficient). In SQL, by contrast, you just write SELECT * FROM users;.

API Gateway - the next service, which lets you create REST endpoints and call them from anywhere. It gives you a public URL that you can send requests to. On its own, the service is fairly useless; it becomes useful when combined with other services, to which it forwards your requests. You can define endpoints, manage and transform requests and responses, mock responses and much more that I won’t describe here. What you need to know is that it gives you an endpoint you can call from your browser: some https://… URL.
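To make the difference concrete, here is a toy key-value store built on a JavaScript Map. It is an in-memory stand-in for illustration only, not DynamoDB; getUser and scanUsers are hypothetical names. Looking up one key is a single direct access, while "all users" has no key to look up, so every entry has to be visited. DynamoDB’s Scan operation works the same way (it reads every item in the table), which is why it gets slow and costly on big tables.

```js
// Toy key-value store: a Map standing in for a table keyed by user id.
// (Illustration only; this is not how DynamoDB is implemented.)
const users = new Map([
  ["1234", { name: "Alice" }],
  ["5678", { name: "Bob" }]
]);

// GET(1234): a single direct lookup by key.
function getUser(id) {
  return users.has(id) ? users.get(id) : null;
}

// "SELECT * FROM users" has no key to look up, so every entry must be visited.
function scanUsers(predicate = () => true) {
  const result = [];
  for (const [id, user] of users) {
    if (predicate(user)) result.push(Object.assign({ id }, user));
  }
  return result;
}

console.log(getUser("1234"));    // { name: 'Alice' }
console.log(scanUsers().length); // 2
```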
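When API Gateway forwards a request to Lambda through the Lambda proxy integration (which, as far as I know, is what the Lambda console’s API Gateway trigger sets up), the function receives the whole HTTP request as an event object. In that event, `body` is a string (or null) and `isBase64Encoded` tells whether API Gateway base64-encoded it. Below is a small sketch of reading a JSON body from such an event; parseJsonBody is a hypothetical helper name, and the sample event is trimmed down to the few fields used here (real events carry many more).

```js
// Sketch: read a JSON object from an API Gateway Lambda proxy event.
// Returns null if the body is missing, malformed, or not a JSON object.
function parseJsonBody(event) {
  if (!event || typeof event.body !== "string") return null;
  // Decode the body first if API Gateway base64-encoded it.
  const raw = event.isBase64Encoded
    ? Buffer.from(event.body, "base64").toString("utf8")
    : event.body;
  try {
    const parsed = JSON.parse(raw);
    return parsed !== null && typeof parsed === "object" && !Array.isArray(parsed)
      ? parsed
      : null;
  } catch (err) {
    return null; // malformed JSON
  }
}

// A trimmed-down example event.
const sampleEvent = {
  httpMethod: "POST",
  isBase64Encoded: false,
  body: '{"email":"test@example.com"}'
};

console.log(parseJsonBody(sampleEvent)); // { email: 'test@example.com' }
```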

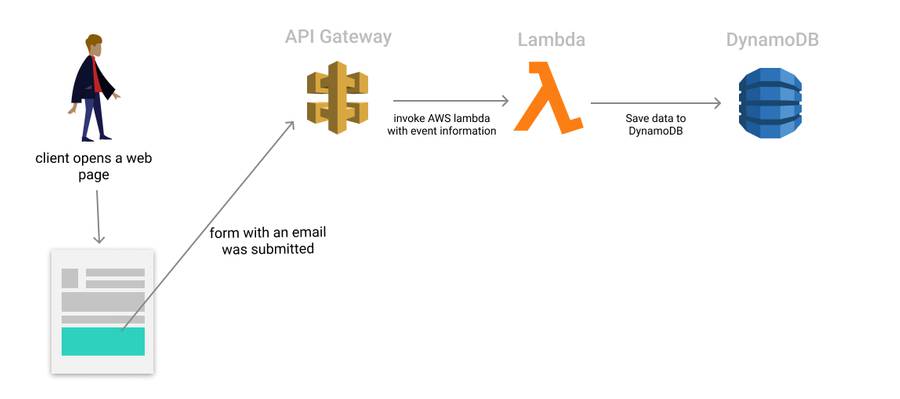

ARCHITECTURE

I tried to keep the architecture as simple as possible. First, the user opens your site, then possibly fills in the form and submits it to the endpoint URL in API Gateway. The request goes on to Lambda, where we can run some logic, such as checking whether the email is valid or already exists in the database, and sending the appropriate response. At the end of the request path, DynamoDB holds all our user data.
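The request path can be sketched without any AWS at all. In the snippet below, a Map stands in for the DynamoDB table (items keyed by a "PK|SK" string, an assumption for this sketch), subscribe is a hypothetical function name, and the email check is deliberately simpler than a real one. It also includes the "already exists" check from the description above, which the handler later in this post does not perform.

```js
// In-memory sketch of the request path: validate -> check duplicates -> store.
// ASSUMPTIONS: a Map stands in for the DynamoDB table, items are keyed by
// "PK|SK", and the email check is deliberately simple.
const table = new Map();
const SIMPLE_EMAIL = /^[^\s@]+@[^\s@]+\.[^\s@]+$/;

function subscribe(email, name = null) {
  // 1. Validate the input coming from the form.
  if (typeof email !== "string" || !SIMPLE_EMAIL.test(email)) {
    return { statusCode: 400, body: { error: "Not valid email" } };
  }
  // 2. Check whether the email is already stored.
  const key = `emails-0|${email}`;
  if (table.has(key)) {
    return { statusCode: 409, body: { error: "Already subscribed" } };
  }
  // 3. Store it and report success.
  table.set(key, { email, name });
  return { statusCode: 200, body: { message: "Email successfully saved" } };
}

console.log(subscribe("reader@example.com").statusCode); // 200
console.log(subscribe("reader@example.com").statusCode); // 409
console.log(subscribe("not-an-email").statusCode);       // 400
```

Returning 409 for a duplicate is a design choice; a quiet 200 is also reasonable and avoids revealing who is already subscribed.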

HANDS ON

Sign in to the AWS console. If you don’t have an account, create one. I should warn you that registration requires a valid payment method: you need to provide your credit card details. Any charges are billed monthly. You are not going to be charged for anything yet, because you have no services and no users using them.

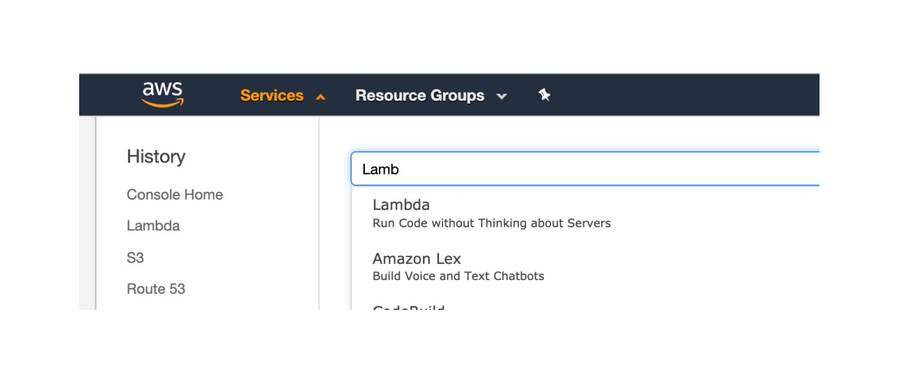

After you have successfully logged in, you should see a search bar. If you can’t see it, open the “Services” menu at the top of the page. Then search for the Lambda service.

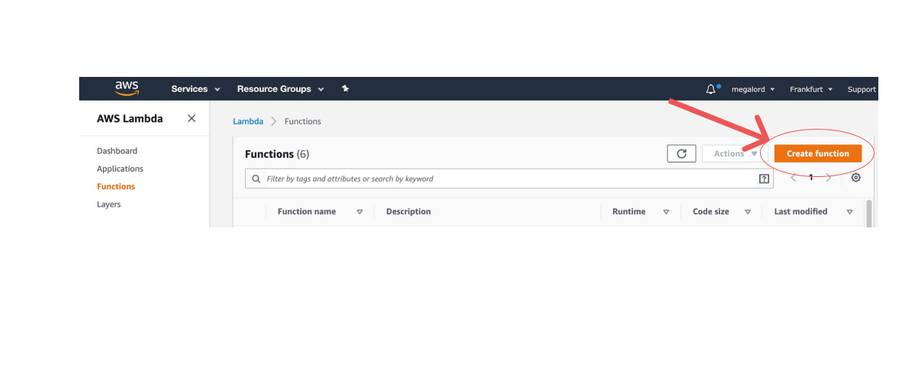

Cool, now create a function.

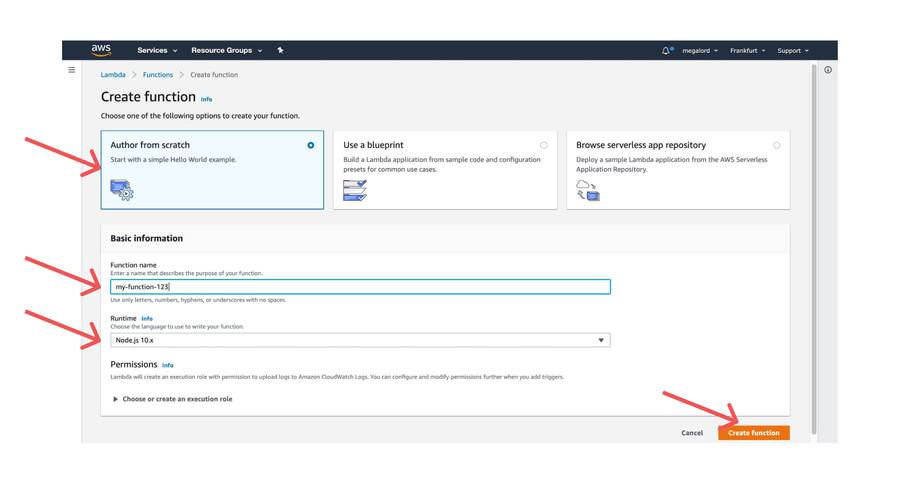

We want to write a new function from scratch (“Author from scratch”), name it something like my-function-123, and write it in Node.js 10.x. Click Create function and wait for the function to be created.

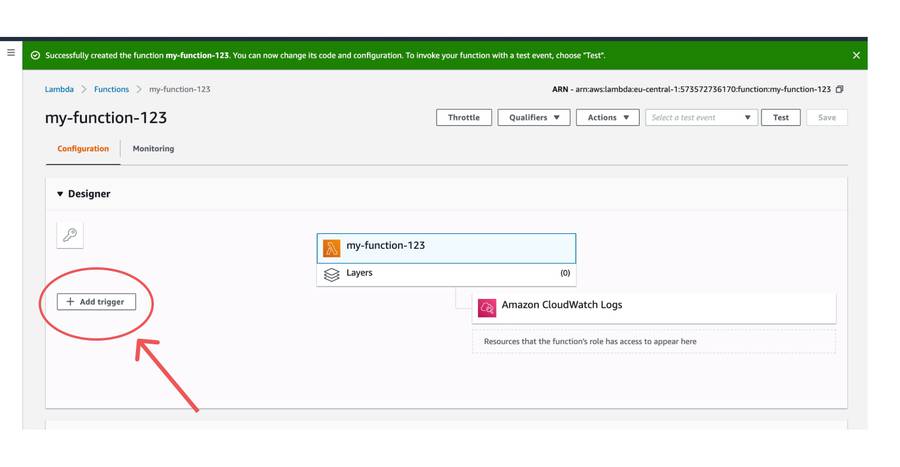

Congratulations, you have created your first Lambda function. As I mentioned above, a Lambda function is invoked by a trigger. In this case, we want to trigger our function with an API request.

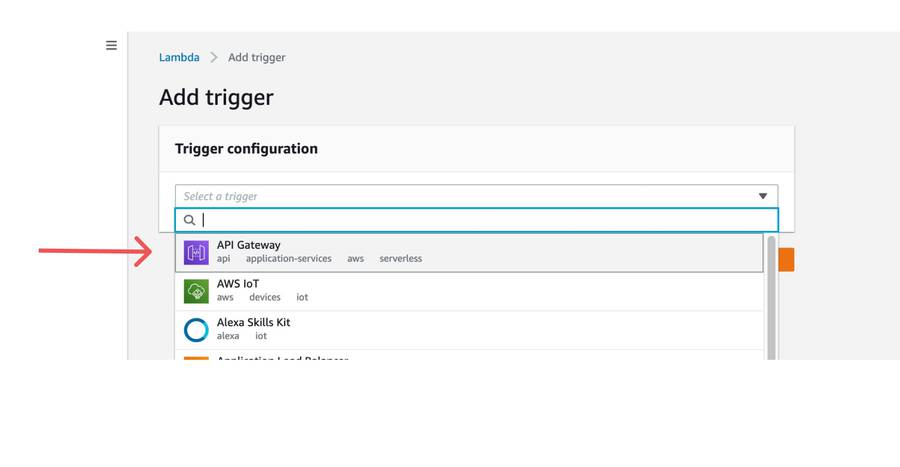

Choose API Gateway from the list of options.

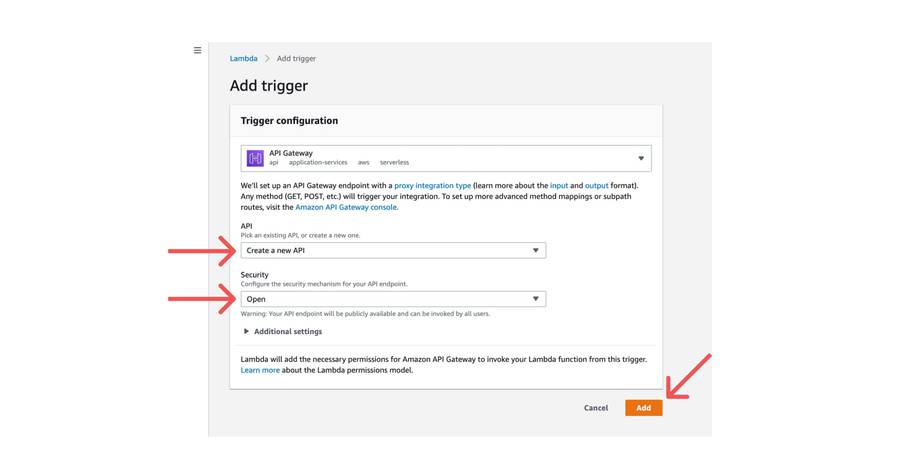

You can use an existing API to invoke a Lambda, but in this case we want to create a new API with the Open security option.

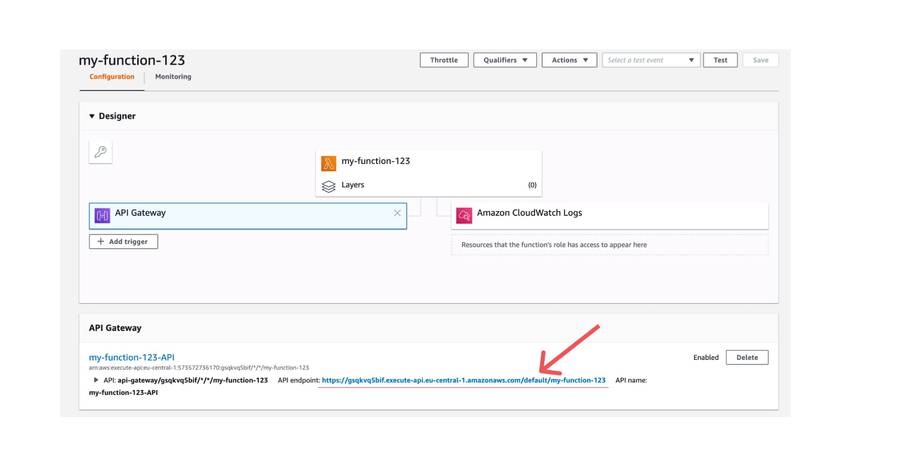

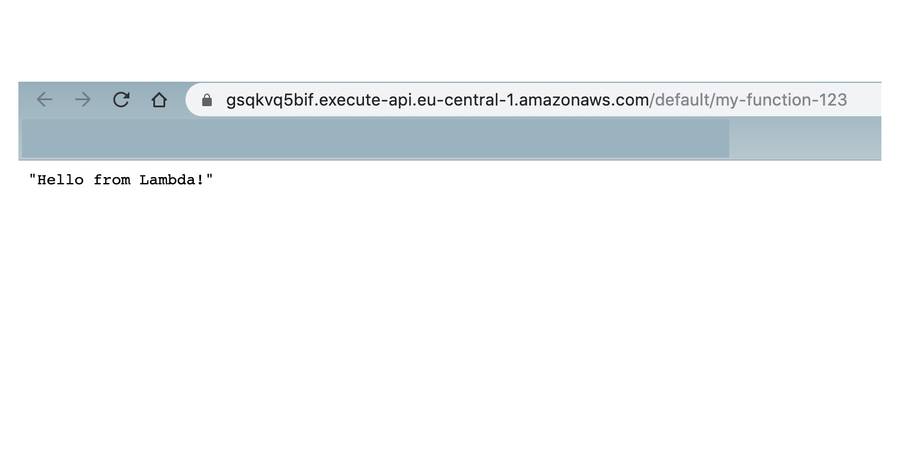

Excellent. Now you have an API endpoint, as shown in the image, and you can open the URL to test that it works.

When you clicked the link, the GET request went through API Gateway, which forwarded it to Lambda; Lambda returned a response to API Gateway, which sent it back to you. In this case, we got the message “Hello from Lambda!”, the default dummy implementation that ships with a function created from scratch.
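For reference, the default code of a Node.js function created from scratch looks roughly like the sketch below (the exact template varies between runtime versions, so treat this as an approximation). It returns an object with a statusCode and a string body, which is the response shape API Gateway’s proxy integration expects from the function; the handler we write later follows the same shape.

```js
// Roughly the default Node.js handler created by "Author from scratch"
// (the exact template differs between runtime versions).
const handler = async (event) => {
  const response = {
    statusCode: 200,
    body: JSON.stringify("Hello from Lambda!")
  };
  return response;
};

// Lambda finds the function through the module's exports ("index.handler").
module.exports = { handler };
```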

Lambda + DynamoDB

Now for the more advanced part: writing code for your Lambda. How do you get it there? Basically, you have three options:

- write code directly in the AWS console editor (for very small scripts)

- upload a .zip file

- upload a .zip file from S3

The last two options are used when your code is bigger and includes dependencies, such as node_modules.

I’m going to use the second way: uploading a .zip file from the terminal with the AWS CLI (aws-cli). Yes, you can work with your AWS account from the command line, too.

To continue, you will need aws-cli. Install it with pip3:

```sh
$ pip3 install awscli --upgrade --user
```

If you don’t have Python, or this doesn’t work for you, please read the full documentation on how to install aws-cli properly.

Once aws-cli is installed, you need to connect it to your AWS account. For that, create a pair of keys (an access key and a secret key) in the AWS web console. For the full configuration steps, see Quickly Configuring the AWS CLI.

All you need to do is go to Account Credentials, generate the two keys (access key and secret key), then configure aws-cli with them, for example:

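```sh
$ aws configure
AWS Access Key ID [None]: DUMMY_ACCESS_KEY
AWS Secret Access Key [None]: DUMMY_SECRET_KEY
Default region name [None]: us-west-2
Default output format [None]: json
```

Set the default region to the one where your Lambda function lives, because later aws lambda commands run against that region. I’m not going into more detail here; you can do it. I believe in you.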

Next steps:

Create a new directory named my-function-123, initialize an npm project in it and install the aws-sdk npm package:

```sh
# create and enter your project directory
$ mkdir my-function-123
$ cd my-function-123

# initialize the project
$ npm init

# install aws-sdk for interacting with AWS services inside your Node application
$ npm install --save aws-sdk
```

Cool, now you should have:

- configured aws-cli in your terminal

- created your npm project directory

- installed aws-sdk

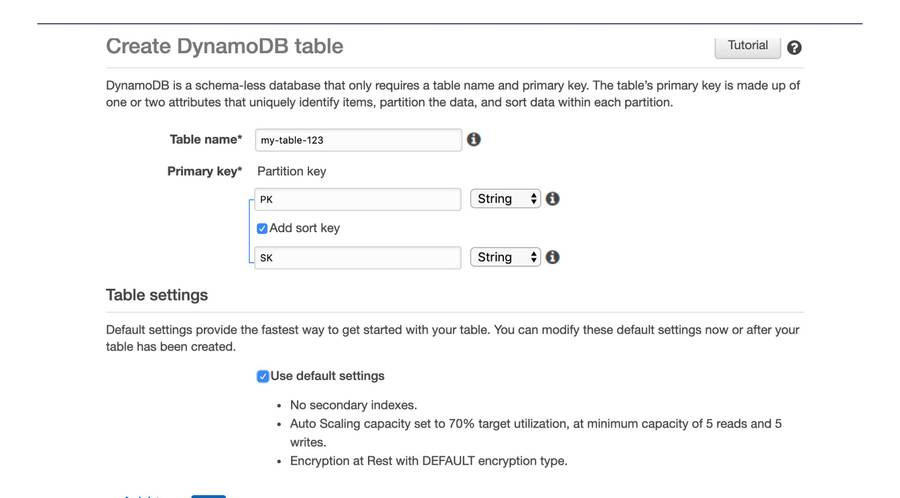

CREATE DYNAMODB TABLE

- Search for the DynamoDB service in your AWS console.

- Click the blue “Create table” button.

- Set the table name to whatever you want, for example “my-table”.

- Check the option to add a sort key; it can be handy to have one.

- Name the partition key “PK” and the sort key “SK”, both of type String.

- (Optional) Uncheck “Use default settings” and set the capacity units to fit your needs.

Read/write capacity units determine how many read or write requests per second your table can handle, and in provisioned mode you pay for exactly these units. One read capacity unit (RCU) lets your table serve one strongly consistent read per second, or two eventually consistent reads per second, of an item up to 4 KB. One write capacity unit (WCU) covers one write per second of an item up to 1 KB. With these factors in mind, you can work out how many units your tables need. Also note that with the default settings, AWS sets up auto scaling for you: it automatically raises capacity when your traffic grows (during the day) and lowers it when there is little traffic (for example, at night).

PK is the partition key; you use it to access your data. SK is the sort key: many items can share one partition key, each with a different sort key, and together PK and SK identify a single item.

It is important to spread your data over as many different PK values as possible. This matters most on high-traffic websites where the database is accessed very frequently. Accessing one PK (one partition) very often can make it a “hot” partition and get requests throttled. I won’t go deeper into this, because subscribing to a newsletter doesn’t look like a high-traffic problem to me (a user subscribes once and then stops interacting with our endpoint).
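The arithmetic behind these units fits in two small functions. The function names are hypothetical; the unit sizes (4 KB per read unit, 1 KB per write unit, eventually consistent reads at half the cost) follow the DynamoDB rules for provisioned capacity described above, and larger items consume extra units, rounded up.

```js
// Provisioned-capacity arithmetic for DynamoDB (sketch).
// One RCU = one strongly consistent read/s (or two eventually consistent reads/s)
// of an item up to 4 KB; one WCU = one write/s of an item up to 1 KB.
function readCapacityUnits(readsPerSecond, itemSizeKb, stronglyConsistent = false) {
  const unitsPerRead = Math.ceil(itemSizeKb / 4); // each started 4 KB block counts
  const strongUnits = readsPerSecond * unitsPerRead;
  // Eventually consistent reads cost half as much.
  return stronglyConsistent ? strongUnits : Math.ceil(strongUnits / 2);
}

function writeCapacityUnits(writesPerSecond, itemSizeKb) {
  return writesPerSecond * Math.ceil(itemSizeKb); // each started 1 KB block counts
}

// 80 reads/s of 3 KB items, eventually consistent: 80 * 1 / 2 = 40 RCUs.
console.log(readCapacityUnits(80, 3));       // 40
console.log(readCapacityUnits(80, 3, true)); // 80
// 10 writes/s of 1.5 KB items: each write needs 2 WCUs, so 20 WCUs.
console.log(writeCapacityUnits(10, 1.5));    // 20
```

A subscription item (an email plus an optional name) is far below 1 KB, so each sign-up costs a single write unit.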

So far we have got:

- Created an API Gateway endpoint

- Created a Lambda function (still with the default code)

- Created a DynamoDB table

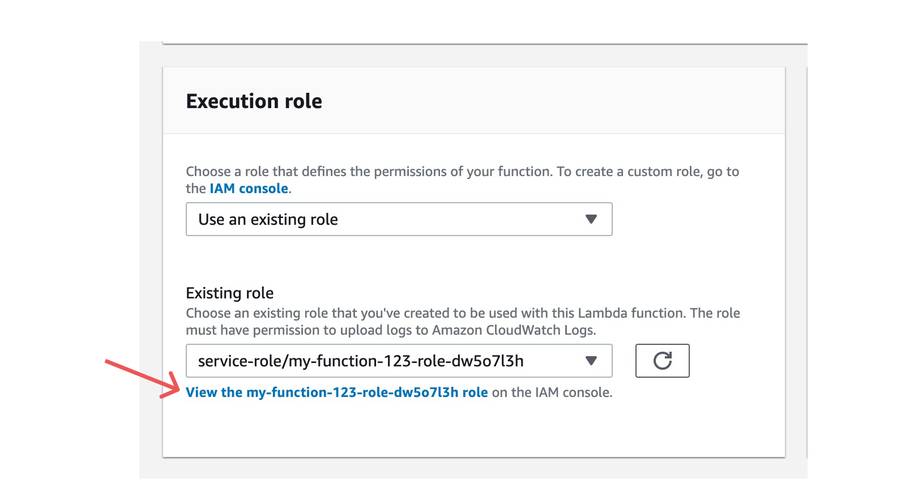

One more thing: we need to allow the Lambda function to read from and write to DynamoDB. Open your function in the AWS console and find the execution role section:

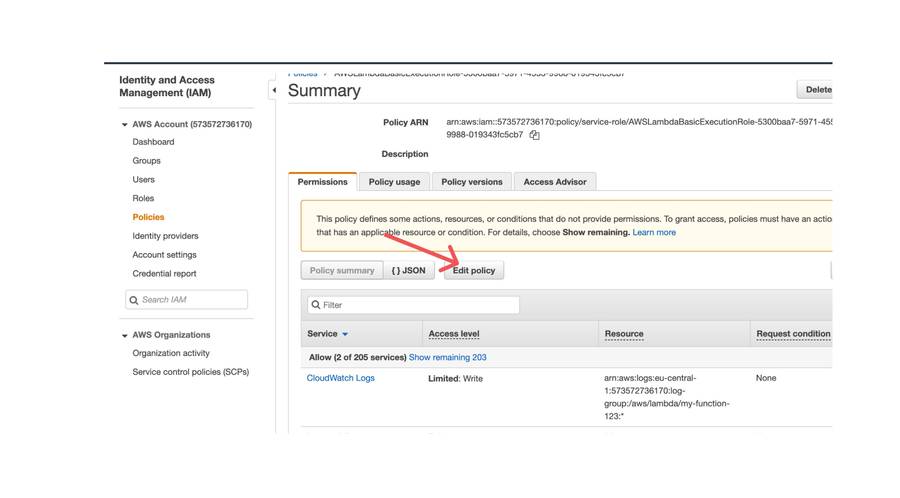

- On your function’s page in the Lambda console, scroll down to the execution role section and click the role link shown in the image.

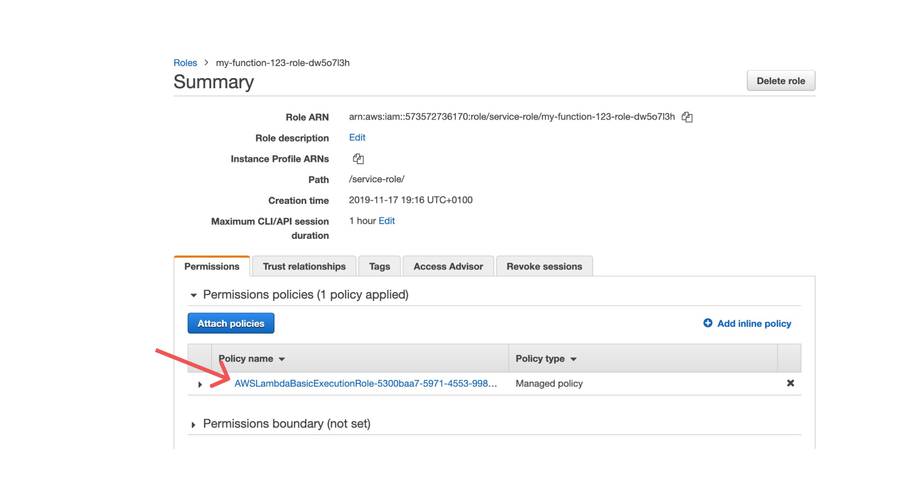

- Click on Edit policy

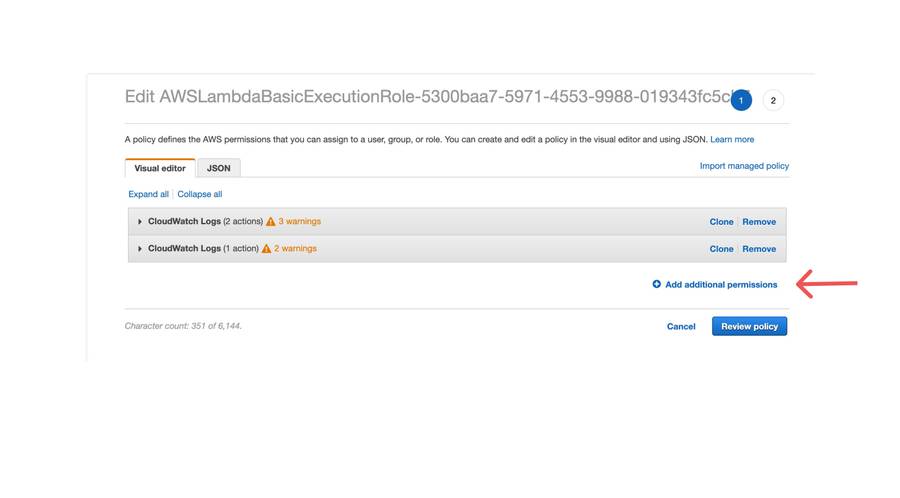

- Click on Add additional permissions

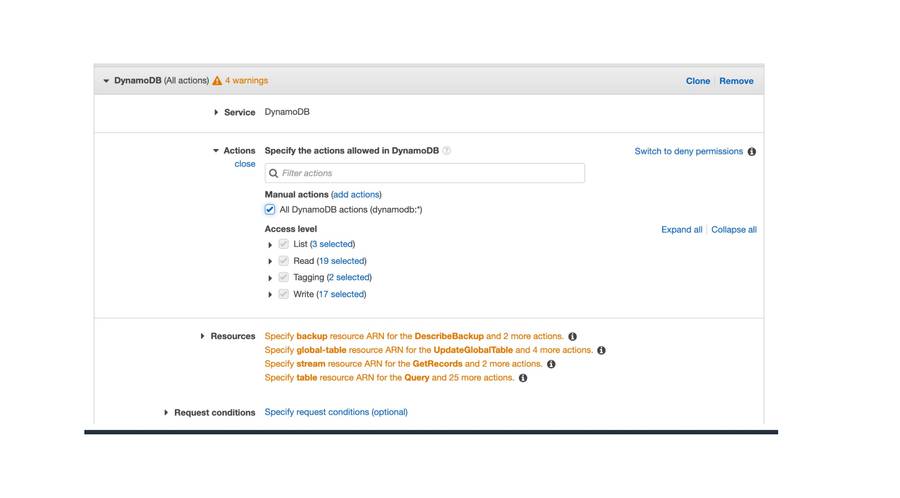

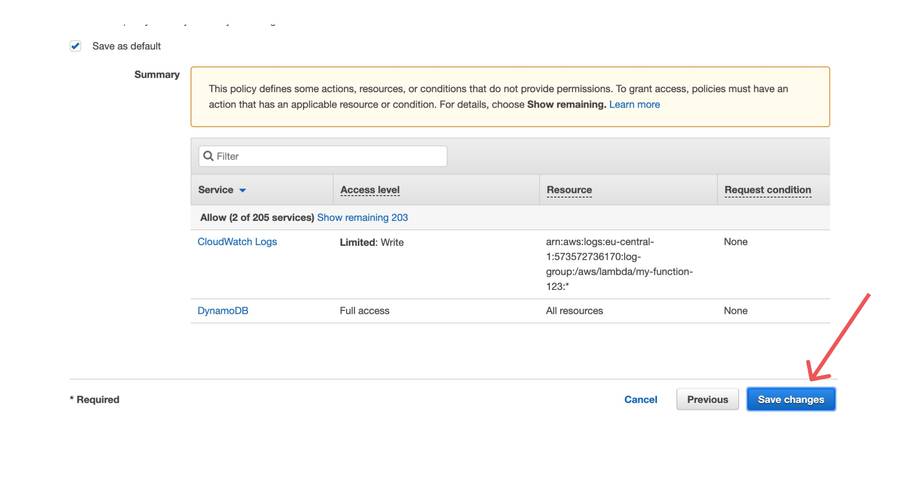

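- Allow all actions, then click Resources and allow all resources too (fine for a quick experiment; for anything beyond that, grant only the DynamoDB actions the function needs, on your table only)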

- Save changes
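Allowing all actions on all resources is the quickest way to get going, but it gives the function far more power than it needs. A narrower alternative is a policy that only allows dynamodb:PutItem on your table. The sketch below generates such a policy document in JavaScript so the pieces are visible; putItemPolicy is a hypothetical helper, and the region, the 12-digit account id and the table name are placeholders you must replace with your own. You could paste the printed JSON into the policy editor’s JSON tab instead of using the visual editor.

```js
// Hypothetical helper: an IAM policy that lets the function write items
// to one DynamoDB table only. All arguments are placeholders.
function putItemPolicy(region, accountId, tableName) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        Action: ["dynamodb:PutItem"],
        // ARN of a single table: arn:aws:dynamodb:<region>:<account>:table/<name>
        Resource: `arn:aws:dynamodb:${region}:${accountId}:table/${tableName}`
      }
    ]
  };
}

console.log(JSON.stringify(putItemPolicy("eu-central-1", "123456789012", "my-table"), null, 2));
```

If you later read data from the function too (for example with Query), add those actions to the list.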

Now we need to write the logic of the Lambda function.

In your local npm project directory, create an index.js file:

```js
// Load the AWS SDK for Node.js (v2)
const AWS = require("aws-sdk");

// Set the region: it must be the region where your DynamoDB table lives
AWS.config.update({ region: "eu-central-1" });

// Create the DynamoDB service object
const ddb = new AWS.DynamoDB();

// Change this to the name of your table
const TABLE_NAME = "my-table";

const emailRegex = /^(([^<>()\[\]\\.,;:\s@"]+(\.[^<>()\[\]\\.,;:\s@"]+)*)|(".+"))@((\[[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}])|(([a-zA-Z\-0-9]+\.)+[a-zA-Z]{2,}))$/;

// Build an API Gateway proxy response with a JSON body
const jsonResponse = (statusCode, payload) => ({
  statusCode,
  body: JSON.stringify(payload),
  headers: {
    "Content-Type": "application/json"
  }
});

const handler = async event => {
  // Parse the request body; a missing or malformed body is a client error
  let payload;
  try {
    payload = JSON.parse(event.body || "{}");
  } catch (err) {
    return jsonResponse(400, { error: "Body is not valid JSON" });
  }
  const { email = null, name = null } = payload || {};

  // if email is missing, return error message
  if (email === null) {
    return jsonResponse(400, { error: "Email not provided" });
  }

  // check if email is valid
  if (typeof email !== "string" || !emailRegex.test(email)) {
    return jsonResponse(400, { error: "Not valid email" });
  }

  try {
    // Save the email as a new item in the table
    await ddb
      .putItem({
        TableName: TABLE_NAME,
        Item: {
          PK: { S: "emails-0" },
          SK: { S: email },
          name: name ? { S: String(name) } : { NULL: true }
        }
      })
      .promise();

    return jsonResponse(200, { email, message: "Email successfully saved" });
  } catch (err) {
    console.error(err);
    return jsonResponse(500, { message: "Something went wrong" });
  }
};

module.exports = {
  handler
};
```

This Lambda code:

- checks that an email is present in the request body

- checks that the email is valid (with the help of a regex)

- saves the email in the partition emails-0, with an optional name if one is present in the body
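As written, putItem silently overwrites an existing item with the same keys, so subscribing twice with the same email just replaces the first item. If you want to detect duplicates (the “already exists” check from the architecture section), DynamoDB can do it atomically with a condition expression. Here is a sketch of the parameters; buildPutEmailParams is a hypothetical helper name, and the partition is fixed to emails-0 as in the handler.

```js
// Hypothetical helper: putItem parameters that refuse to overwrite an email
// that is already stored. DynamoDB evaluates the condition against the
// existing item with the same PK and SK; attribute_not_exists(SK) is true
// only when there is no such item yet.
function buildPutEmailParams(tableName, email, name) {
  return {
    TableName: tableName,
    Item: {
      PK: { S: "emails-0" },
      SK: { S: email },
      name: name ? { S: name } : { NULL: true }
    },
    ConditionExpression: "attribute_not_exists(SK)"
  };
}

console.log(JSON.stringify(buildPutEmailParams("my-table", "reader@example.com")));
```

In the handler you would pass these parameters to ddb.putItem(params).promise(). If the email already exists, the call rejects with an error whose code is "ConditionalCheckFailedException", which you could map to a friendly response instead of the generic 500.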

I named the partition emails-0, and we will store the emails as sort keys under it. Why emails-0? As I mentioned earlier, it is not always a good idea to design a table so that all data goes into one partition. If traffic suddenly blows up, we can, for example, create several partitions and put each email into a random one (for example, 1 to 10); then there is only a small chance that one partition becomes “hot”, that is, overloaded with requests. Another good idea is to partition by the first character of the email: emails-{$CHAR}, where $CHAR is one of 0-9, a-z or A-Z.

Now that our code is done, we need a small script that uploads it to AWS.
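Both partitioning ideas are easy to sketch. randomPartitionKey and firstCharPartitionKey are hypothetical helper names; ten shards numbered emails-0 to emails-9 is an assumption matching the “about ten partitions” idea above, and lowercasing the first character (so Alice@ and alice@ share a partition) is my own assumption, whereas the text above keeps upper and lower case apart.

```js
// Sketches of the two partitioning ideas (helper names are hypothetical).
const SHARD_COUNT = 10; // assumption: ten shards, emails-0 ... emails-9

// Idea 1: put each email into a random shard. Writes spread evenly,
// but looking up one email later means querying every shard.
function randomPartitionKey(shards = SHARD_COUNT) {
  return `emails-${Math.floor(Math.random() * shards)}`;
}

// Idea 2: derive the shard from the first character of the email, so the
// key can always be recomputed. Lowercasing is an assumption; anything
// outside 0-9/a-z goes to a hypothetical "emails-other" partition.
function firstCharPartitionKey(email) {
  const first = email.trim().charAt(0).toLowerCase();
  return /^[a-z0-9]$/.test(first) ? `emails-${first}` : "emails-other";
}

console.log(firstCharPartitionKey("Alice@example.com")); // emails-a
console.log(randomPartitionKey()); // random, e.g. emails-7
```

The random variant spreads load most evenly but loses the ability to find a given email directly; the first-character variant keeps direct lookups at the cost of a less even spread, since far more addresses start with some letters than with others.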

Create a new file named upload_script.sh:

```sh
#!/bin/bash
# stop at the first failing command
set -e

# zip the handler file and node_modules
# -r stands for recursive (include all subdirectories)
# -1 stands for fast compression
zip -r -1 zipped.zip index.js node_modules

# CHANGE my-function-123 to the name of your function
aws lambda update-function-code --function-name my-function-123 --zip-file fileb://zipped.zip

# remove the zip, so the next run builds a fresh archive instead of updating this one
rm zipped.zip
```

Save it and make it executable:

```sh
$ chmod +x ./upload_script.sh
```

The final step is to run this script (./upload_script.sh). The script will:

- zip the JavaScript files together with node_modules (and yes, you need node_modules too)

- upload the code to AWS with the aws command (from aws-cli)

- remove the zip file once the upload is done

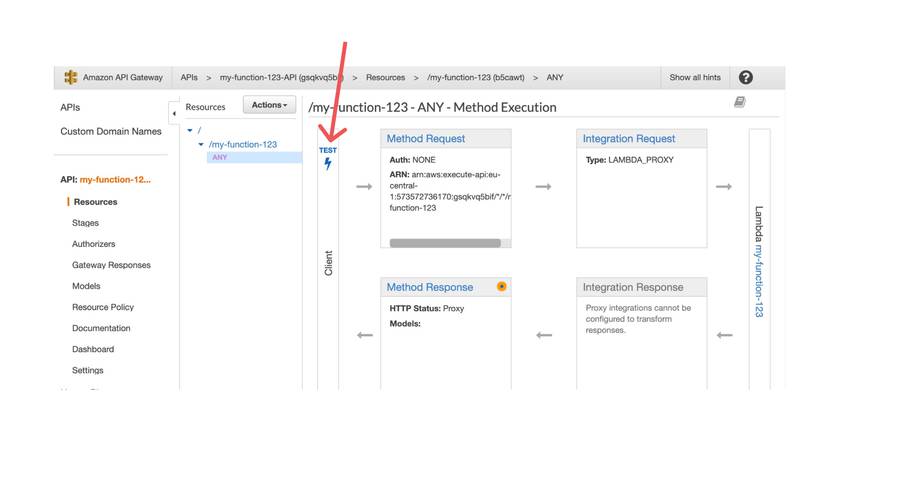

Let’s test it. Go to the API Gateway service and click Test:

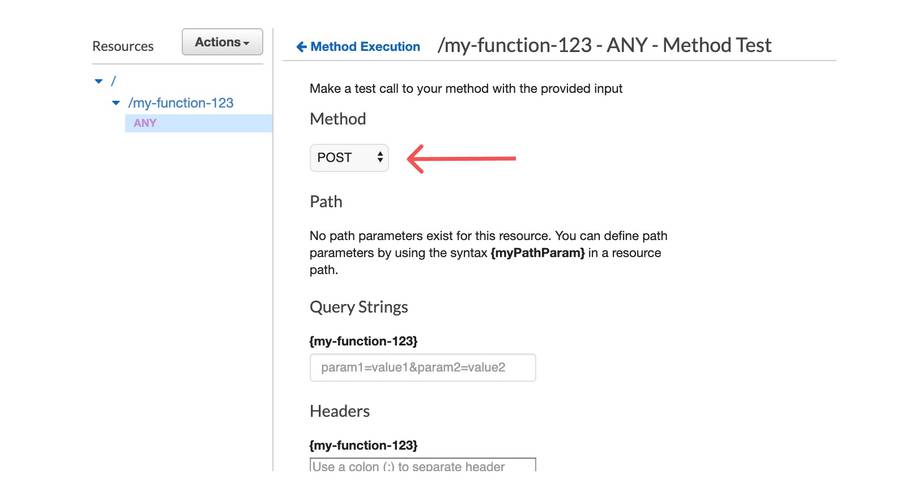

Set the method to POST so that you can fill in the request body.

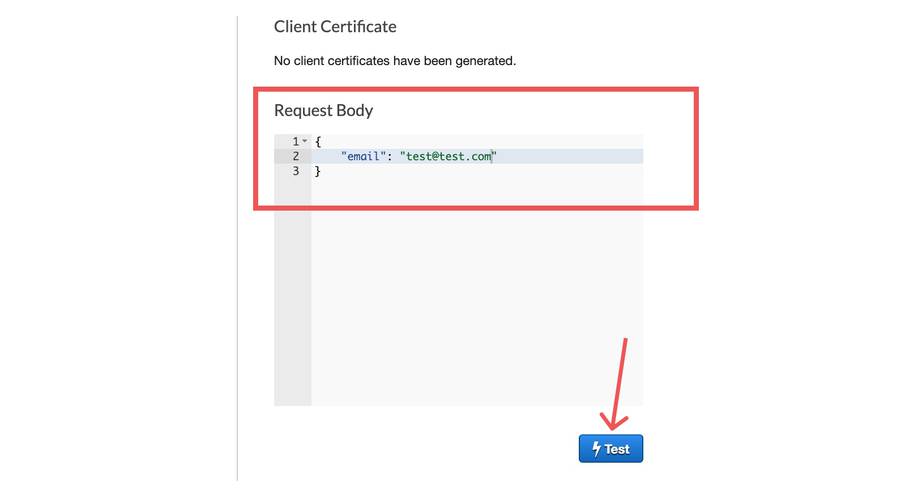

Fill the body with JSON containing an email attribute with a test email, for example {"email": "test@example.com"}.

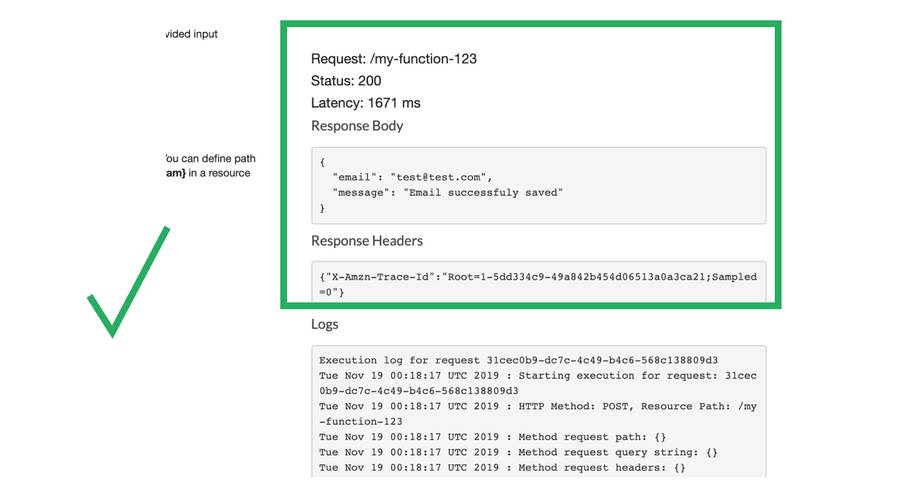

Finally, we see success!

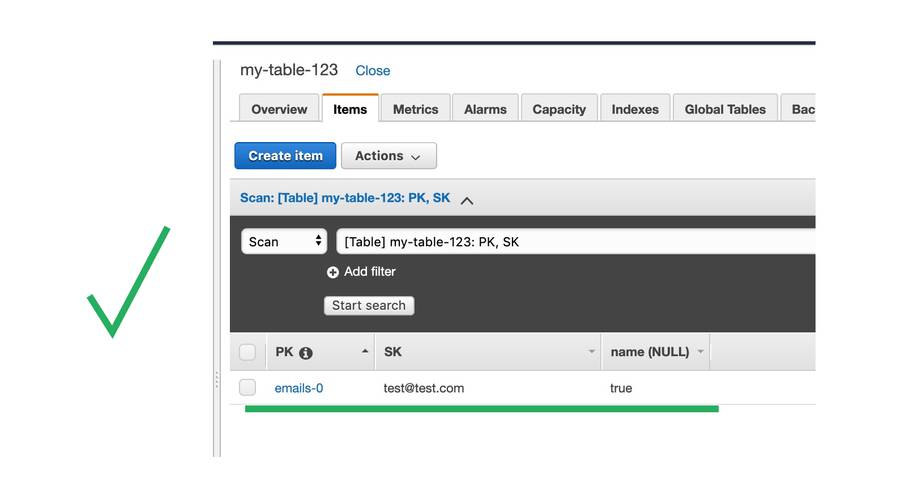

You can also check in the DynamoDB console that the item is really there.
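If you’d rather check from code (or later want to read your whole list), remember the key-value limitation from earlier: you look items up by key. Because all emails share the partition key emails-0, a single Query on that key returns them all. Below is a sketch; buildListEmailsParams and listAllEmails are hypothetical helper names, and the DynamoDB call is passed in as a function so the paging logic can run without AWS. With aws-sdk v2 you could pass params => ddb.query(params).promise(), and the function’s role would then also need the dynamodb:Query permission.

```js
// Hypothetical helpers: read back every email stored in one partition.
// Query only touches items with the given PK, unlike a Scan of the whole table.
function buildListEmailsParams(tableName, partitionKey, startKey) {
  const params = {
    TableName: tableName,
    KeyConditionExpression: "PK = :pk",
    ExpressionAttributeValues: { ":pk": { S: partitionKey } }
  };
  // Continue where the previous page stopped (each Query page is at most 1 MB).
  if (startKey) params.ExclusiveStartKey = startKey;
  return params;
}

// queryFn is injected so the loop can run without AWS; with aws-sdk v2 it
// could be: params => ddb.query(params).promise()
async function listAllEmails(queryFn, tableName, partitionKey) {
  const emails = [];
  let startKey;
  do {
    const page = await queryFn(buildListEmailsParams(tableName, partitionKey, startKey));
    for (const item of page.Items) emails.push(item.SK.S);
    startKey = page.LastEvaluatedKey; // undefined on the last page
  } while (startKey);
  return emails;
}
```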

Improvement

In the next part, I’m planning to build a basic form connected to this endpoint and test whether it really works :).

If you see any error or bug, or want to improve the post, please leave a comment below. I will read it and give you credit for it. Also, if you see any English grammar mistakes, please let me know as well; English is not my native language. Preparing such a long tutorial isn’t easy at all. I hope you enjoyed it. Thanks.
